This is one of the following six articles on 2-Independent-Sample Unpooled t-Tests in Excel

2-Independent-Sample Unpooled t-Test in 4 Steps in Excel 2010 and Excel 2013

Variance Tests: Levene’s Test, Brown-Forsythe Test, and F-Test in Excel For 2-Sample Unpooled t-Test

2-Sample Unpooled t-Test Excel Calculations, Formulas, and Tools

Effect Size for a 2-Independent-Sample Unpooled t-Test in Excel 2010 and Excel 2013

Test Power of a 2-Independent Sample Unpooled t-Test With G-Power Utility

Excel Normality Tests: Kolmogorov-Smirnov, Anderson-Darling, and Shapiro-Wilk Tests For 2-Sample Unpooled t-Test

The following five normality tests will be performed on the sample data here:

An Excel histogram of the sample data will be created.

A normal probability plot of the sample data will be created in Excel.

The Kolmogorov-Smirnov test for normality of the sample data will be performed in Excel.

The Anderson-Darling test for normality of the sample data will be performed in Excel.

The Shapiro-Wilk test for normality of the sample data will be performed in Excel.

The quickest way to evaluate normality of a sample is to construct an Excel histogram from the sample data.

Histogram in Excel

Excel histograms of both sample groups are as follows:


To create this histogram in Excel, fill in the Excel Histogram dialogue box as follows:


To create this histogram in Excel, fill in the Excel Histogram dialogue box as follows:


Both sample groups appear to be distributed reasonably closely to the bell-shaped normal distribution. Note that the bin size in an Excel histogram is set manually by the user, and this arbitrary choice can have a significant influence on the shape of the histogram. Different bin sizes could produce an output that does not appear bell-shaped at all. What the user actually sets in an Excel histogram is the upper boundary of each bin.
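The effect of bin boundaries can be illustrated outside of Excel with a short Python sketch. It assumes the usual convention that a value is counted in the first bin whose upper boundary is greater than or equal to it, with a final "More" bucket for anything above the last boundary. The function name and the data are hypothetical and are not the article's sample.

```python
from bisect import bisect_left

def excel_style_histogram(values, upper_bounds):
    """Count values per bin, where each bin is defined by its upper boundary.

    A value x lands in the first bin whose upper boundary is >= x.
    Values above the last boundary go into a final "More" bucket.
    """
    bounds = sorted(upper_bounds)
    counts = [0] * (len(bounds) + 1)  # last slot is the "More" bucket
    for x in values:
        counts[bisect_left(bounds, x)] += 1
    return counts

# Hypothetical illustrative data, NOT the article's sample.
sample = [12, 15, 17, 18, 20, 21, 21, 22, 24, 25, 27, 30]

# Same data, two different sets of bin upper boundaries.
coarse = excel_style_histogram(sample, [15, 20, 25, 30])
fine = excel_style_histogram(sample, [14, 16, 18, 20, 22, 24, 26, 28, 30])

print(coarse)  # [2, 3, 5, 2, 0]
print(fine)    # [1, 1, 2, 1, 3, 1, 1, 1, 1, 0]
```

The coarse bins give a clear single peak, while the finer bins spread the same twelve values into a much flatter profile, which is why a histogram alone should not be the only normality check.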

Normal Probability Plot in Excel

Another way to graphically evaluate normality of each data sample is to create a normal probability plot for each sample group. This can be implemented in Excel and appears as follows:


Normal probability plots for both sample groups show that the data appear to be very close to normally distributed. The actual sample data (red) closely match the values that would be expected if the sample were perfectly normally distributed (blue) and never go beyond the 95 percent confidence interval boundaries (green).
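The pairs of points behind a normal probability plot can be sketched in Python as follows. This is only one common construction: it assumes plotting positions of (i - 0.5)/n and a normal distribution fitted with the sample mean and sample standard deviation, which may differ from the exact method used in the workbook. The data and function name are hypothetical.

```python
from statistics import NormalDist, mean, stdev

def normal_probability_points(values):
    """Return (expected_value, actual_value) pairs for a normal probability plot.

    Assumption: plotting position p_i = (i - 0.5) / n for the i-th smallest value.
    """
    xs = sorted(values)
    n = len(xs)
    fitted = NormalDist(mean(xs), stdev(xs))  # normal curve fitted to the sample
    points = []
    for i, x in enumerate(xs, start=1):
        p = (i - 0.5) / n
        points.append((fitted.inv_cdf(p), x))  # value expected under normality
    return points

# Hypothetical illustrative data, NOT the article's sample.
sample = [12, 15, 17, 18, 20, 21, 21, 22, 24, 25, 27, 30]
points = normal_probability_points(sample)

for expected, actual in points:
    print(f"expected {expected:6.2f}   actual {actual:6.2f}")
```

If the sample is close to normal, the actual values track the expected values closely, which is what the plotted red and blue series show.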

Kolmogorov-Smirnov Test For Normality in Excel

The Kolmogorov-Smirnov Test is a hypothesis test that is widely used to determine whether a data sample is normally distributed. The Kolmogorov-Smirnov Test calculates the distance between the Cumulative Distribution Function (CDF) of each data point and what the CDF of that data point would be if the sample were perfectly normally distributed. The Null Hypothesis of the Kolmogorov-Smirnov Test states that the distribution of actual data points matches the distribution that is being tested. In this case the data sample is being compared to the normal distribution.

The largest distance between the CDF of any data point and its expected CDF is compared to the Kolmogorov-Smirnov Critical Value for a specific sample size and Alpha. If this largest distance exceeds the Critical Value, the Null Hypothesis is rejected and the data sample is determined to have a different distribution than the tested distribution. If the largest distance does not exceed the Critical Value, we cannot reject the Null Hypothesis, which states that the sample has the same distribution as the tested distribution.

F(Xk) = CDF(Xk) for normal distribution

F(Xk) = NORM.DIST(Xk, Sample Mean, Sample Stan. Dev., TRUE)
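As a rough cross-check of the spreadsheet calculation, the maximum difference can be sketched in Python. The sketch assumes the standard form of the statistic, in which the fitted normal CDF at each sorted point is compared with the empirical CDF just before and just after that point; the workbook may compute the difference slightly differently. `NormalDist(...).cdf` plays the role of NORM.DIST with the cumulative argument set to TRUE. The data and function name are hypothetical.

```python
from statistics import NormalDist, mean, stdev

def ks_max_difference(values):
    """Largest gap between the empirical CDF and the fitted normal CDF."""
    xs = sorted(values)
    n = len(xs)
    fitted = NormalDist(mean(xs), stdev(xs))  # sample mean and sample std. dev.
    d = 0.0
    for k, x in enumerate(xs, start=1):
        expected = fitted.cdf(x)  # F(Xk) if the sample were perfectly normal
        # The empirical CDF steps from (k - 1)/n to k/n at Xk; check both sides.
        d = max(d, k / n - expected, expected - (k - 1) / n)
    return d

# Hypothetical illustrative data, NOT the article's sample.
sample = [12, 15, 17, 18, 20, 21, 21, 22, 24, 25, 27, 30]
d_max = ks_max_difference(sample)
print(round(d_max, 4))
```

The returned value is the number that is compared against the Kolmogorov-Smirnov Critical Value for the sample size and Alpha.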

Variable 1 – Shift A Units Produced

0.0938 = Max Difference Between Actual and Expected CDF

20 = n = Number of Data Points

0.05 = α

Variable 2 - Shift B Units Produced


0.1212 = Max Difference Between Actual and Expected CDF

17 = n = Number of Data Points

0.05 = α


The Null Hypothesis Stating That the Data Are Normally Distributed Cannot Be Rejected

The Null Hypothesis for the Kolmogorov-Smirnov Test for Normality, which states that the sample data are normally distributed, is rejected if the maximum difference between the expected and actual CDF of any of the data points exceeds the Critical Value for the given n and α.

The Max Difference Between the Actual and Expected CDF for Variable 1 (0.0938) and for Variable 2 (0.1212) are well below the Kolmogorov-Smirnov Critical Values for n = 20 (0.29) and for n = 17 (approximately 0.32) at α = 0.05, so the Null Hypothesis of the Kolmogorov-Smirnov Test cannot be rejected for either of the two sample groups.

Anderson-Darling Test For Normality in Excel

The Anderson-Darling Test is a hypothesis test that is widely used to determine whether a data sample is normally distributed. The Anderson-Darling Test calculates a test statistic based upon the actual value of each data point and the Cumulative Distribution Function (CDF) of each data point if the sample were perfectly normally distributed.

The Anderson-Darling Test is considered to be slightly more powerful than the Kolmogorov-Smirnov test for the following two reasons:

The Kolmogorov-Smirnov test is distribution-free, i.e., its critical values are the same for all distributions tested. The Anderson-Darling test requires critical values calculated for each tested distribution and is therefore more sensitive to the specific distribution.

The Anderson-Darling test gives more weight to values in the outer tails than the Kolmogorov-Smirnov test. The K-S test is less sensitive to aberration in outer values than the A-D test.

If the test statistic exceeds the Anderson-Darling Critical Value for a given Alpha, the Null Hypothesis is rejected and the data sample is determined to have a different distribution than the tested distribution. If the test statistic does not exceed the Critical Value, we cannot reject the Null Hypothesis, which states that the sample has the same distribution as the tested distribution.

F(Xk) = CDF(Xk) for normal distribution

F(Xk) = NORM.DIST(Xk, Sample Mean, Sample Stan. Dev., TRUE)
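The unadjusted Anderson-Darling statistic can be sketched in Python using the same fitted normal CDF. The sketch uses the textbook form A² = -n - (1/n) Σ (2k - 1)[ln F(Xk) + ln(1 - F(Xn+1-k))]. The small-sample adjustment that turns A² into the Adjusted Test Statistic A* is not reproduced here, because its exact formula is not shown in the text. The data and function name are hypothetical.

```python
from math import log
from statistics import NormalDist, mean, stdev

def anderson_darling_a2(values):
    """Unadjusted Anderson-Darling statistic A^2 against a fitted normal."""
    xs = sorted(values)
    n = len(xs)
    fitted = NormalDist(mean(xs), stdev(xs))  # sample mean and sample std. dev.
    cdf = [fitted.cdf(x) for x in xs]         # F(Xk) for each sorted point
    total = 0.0
    for k in range(1, n + 1):
        # Pair the k-th smallest point with the k-th largest point.
        total += (2 * k - 1) * (log(cdf[k - 1]) + log(1.0 - cdf[n - k]))
    return -n - total / n

# Hypothetical illustrative data, NOT the article's sample.
sample = [12, 15, 17, 18, 20, 21, 21, 22, 24, 25, 27, 30]
a2 = anderson_darling_a2(sample)
print(round(a2, 4))
```

The logarithms of F(Xk) and 1 - F(Xk) grow rapidly in magnitude near 0 and 1, which is how the statistic gives extra weight to points in the outer tails.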

Variable 1 - Shift A Units Produced


Adjusted Test Statistic A* = 0.253

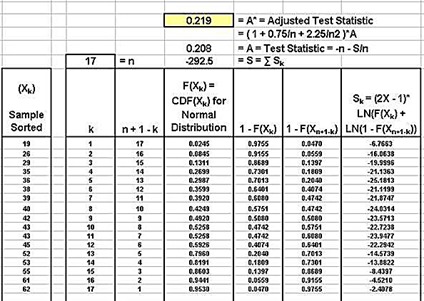

Variable 2 - Shift B Units Produced

Adjusted Test Statistic A* = 0.219

Reject the Null Hypothesis of the Anderson-Darling Test, which states that the data are normally distributed, if any of the following are true:

A* > 0.576 When Level of Significance (α) = 0.15

A* > 0.656 When Level of Significance (α) = 0.10

A* > 0.787 When Level of Significance (α) = 0.05

A* > 1.092 When Level of Significance (α) = 0.01

The Null Hypothesis Stating That the Data Are Normally Distributed Cannot Be Rejected

The Null Hypothesis for the Anderson-Darling Test for Normality, which states that the sample data are normally distributed, is rejected if the Adjusted Test Statistic (A*) exceeds the Critical Value for the given α.

The Adjusted Test Statistic (A*) for Variable 1 (0.253) and for Variable 2 (0.219) are well below the Anderson-Darling Critical Value for α = 0.05 (0.787), so the Null Hypothesis of the Anderson-Darling Test cannot be rejected for either of the two sample groups.
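The decision rule above can be written as a small Python lookup. The critical values and the two A* values are taken directly from this article; the function and variable names are hypothetical.

```python
# Critical values for the adjusted statistic A*, keyed by level of significance.
AD_CRITICAL_VALUES = {0.15: 0.576, 0.10: 0.656, 0.05: 0.787, 0.01: 1.092}

def rejected_alphas(a_star):
    """Return the significance levels at which normality would be rejected."""
    return [alpha for alpha, critical in AD_CRITICAL_VALUES.items()
            if a_star > critical]

shift_a = rejected_alphas(0.253)  # Variable 1 - Shift A Units Produced
shift_b = rejected_alphas(0.219)  # Variable 2 - Shift B Units Produced

print(shift_a)  # []
print(shift_b)  # []
```

An empty list means the Null Hypothesis of normality is not rejected at any of the listed levels of significance, including the least strict one (α = 0.15).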

Shapiro-Wilk Test For Normality in Excel

The Shapiro-Wilk Test is a hypothesis test that is widely used to determine whether a data sample is normally distributed. A test statistic W is calculated. If this test statistic is less than a critical value of W for a given level of significance (alpha) and sample size, the Null Hypothesis which states that the sample is normally distributed is rejected.

The Shapiro-Wilk Test is a robust normality test and is widely used because of its slightly superior performance against other normality tests, especially with small sample sizes. Superior performance means that, when the data are in fact not normally distributed, it correctly rejects the Null Hypothesis that the data are normally distributed a slightly higher percentage of the time than most other normality tests.

The Shapiro-Wilk normality test is generally regarded as being slightly more powerful than the Anderson-Darling normality test, which in turn is regarded as being slightly more powerful than the Kolmogorov-Smirnov normality test.

Variable 1 - Shift A Units Produced


0.966538 = Test Statistic W

0.905 = W Critical for the following n and Alpha

20 = n = Number of Data Points

0.05 = α

The Null Hypothesis Stating That the Data Are Normally Distributed Cannot Be Rejected

Test Statistic W (0.966538) is larger than W Critical 0.905. The Null Hypothesis therefore cannot be rejected. There is not enough evidence to state that the data are not normally distributed with a confidence level of 95 percent.

Variable 2 - Shift B Units Produced


0.974736 = Test Statistic W

0.892 = W Critical for the following n and Alpha

17 = n = Number of Data Points

0.05 = α

The Null Hypothesis Stating That the Data Are Normally Distributed Cannot Be Rejected

Test Statistic W (0.974736) is larger than W Critical 0.892. The Null Hypothesis therefore cannot be rejected. There is not enough evidence to state that the data are not normally distributed with a confidence level of 95 percent.
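Unlike the Kolmogorov-Smirnov and Anderson-Darling tests, the Shapiro-Wilk test rejects normality when the test statistic falls below the critical value. A minimal Python sketch of that comparison, using the W and W Critical values reported above (the function name is hypothetical):

```python
def shapiro_wilk_rejects(w_statistic, w_critical):
    """Return True if the Null Hypothesis of normality is rejected.

    Note the direction: small values of W are evidence against normality.
    """
    return w_statistic < w_critical

results = {
    "Shift A": shapiro_wilk_rejects(0.966538, 0.905),  # n = 20, alpha = 0.05
    "Shift B": shapiro_wilk_rejects(0.974736, 0.892),  # n = 17, alpha = 0.05
}
print(results)  # {'Shift A': False, 'Shift B': False}
```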

Correctable Reasons That Normal Data Can Appear Non-Normal

If a normality test indicates that data are not normally distributed, it is a good idea to do a quick evaluation of whether any of the following factors have caused normally-distributed data to appear to be non-normally-distributed:

1) Outliers

– Too many outliers can easily skew normally-distributed data. An outlier can often be removed if a specific cause of its extreme value can be identified. Some outliers are expected in normally-distributed data.

2) Data Has Been Affected by More Than One Process

– Variations to a process such as shift changes or operator changes can change the distribution of data. Multiple modal values in the data are common indicators that this might be occurring. The effects of different inputs must be identified and eliminated from the data.

3) Not Enough Data

– Normally-distributed data will often not assume the appearance of normality until at least 25 data points have been sampled.

4) Measuring Devices Have Poor Resolution

– Sometimes (but not always) this problem can be solved by using a larger sample size.

5) Data Approaching Zero or a Natural Limit

– If a large number of data values approach a limit such as zero, calculations using very small values might skew computations of important values such as the mean. A simple solution might be to raise all the values by a certain amount.

6) Only a Subset of a Process’ Output Is Being Analyzed

– If only a subset of data from an entire process is being used, a representative sample is not being collected. Normally-distributed results would not appear normally distributed if a representative sample of the entire process is not collected.

Nonparametric Alternatives to the Two-Independent-Sample, Unpooled t-Test in Excel

When normality of data cannot be confirmed for a small sample, it is necessary to substitute a nonparametric test for a t-Test. Nonparametric tests do not have the same normality requirement that the t-Test does. The most common nonparametric test that can be substituted for the two-independent-sample t-Test when data normality cannot be confirmed is the Mann-Whitney U Test.

A Mann-Whitney U test was performed on the data in a blog article that was part of the section covering the pooled, two-independent-sample t-Test but will not be performed on this data used in the unpooled, two-independent-sample t-Test. The procedure for the Mann-Whitney U test is exactly the same for pooled and unpooled two-independent sample t-Tests.
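For readers who want a sense of what the Mann-Whitney U test computes, the U statistic itself can be sketched in Python. This sketch covers only the rank-sum calculation, with tied values given their average rank; it does not look up critical values or compute a p-value, and it is not the step-by-step Excel procedure from the other article. The function names and data are hypothetical.

```python
def average_ranks(values):
    """Rank values from 1..n, giving tied values the average of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j to the end of the current run of tied values.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg = (i + j) / 2 + 1  # average of the 1-based positions i+1 .. j+1
        for k in range(i, j + 1):
            ranks[order[k]] = avg
        i = j + 1
    return ranks

def mann_whitney_u(group_a, group_b):
    """Return (U, U_a, U_b), where U is the smaller of the two U statistics."""
    ranks = average_ranks(list(group_a) + list(group_b))
    n_a, n_b = len(group_a), len(group_b)
    rank_sum_a = sum(ranks[:n_a])
    u_a = rank_sum_a - n_a * (n_a + 1) / 2
    u_b = n_a * n_b - u_a
    return min(u_a, u_b), u_a, u_b

# Hypothetical illustrative data, NOT the article's samples.
u, u_a, u_b = mann_whitney_u([1, 2, 3], [4, 5, 6])
print(u, u_a, u_b)  # 0.0 0.0 9.0
```

Because the calculation uses only ranks, it does not depend on the data being normally distributed, which is why the test can stand in for the t-Test when normality cannot be confirmed.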
